More and more frequently, discussions turn to so-called hate speech, meaning offensive and hateful posts by individual users, when divisive issues come up on social media or in online comment sections. Without sufficient moderation, hate speech can quickly cause a discussion to escalate or can stifle it altogether. Operators of such platforms are therefore advised to identify and moderate hateful communication. However, the large volume of data and the speed of communication make this difficult. In particular, the debate over the accommodation of refugees since 2015 has strengthened the assumption that hate speech on social media poses a threat not only to individuals but also to society as a whole, since hateful communication can be linked to the political advancement of right-wing extremist parties, political apathy, and racist crimes.

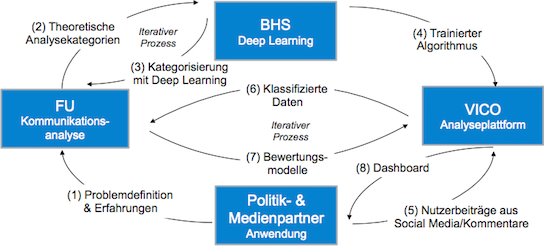

The three-year joint project NOHATE aims to analyse hateful communication on social media platforms, in online forums and in comment sections in order to identify underlying causes and dynamics, and to develop methods and software for the (early) recognition of hateful communication as well as potential strategies for de-escalation. A case study will offer a multidimensional perspective on displacement and migration and provide data for software development.

The partners in the joint project are Freie Universität Berlin, Beuth University of Applied Sciences Berlin and VICO Research & Consulting. The project is funded by the Federal Ministry of Education and Research (BMBF) within the context of the funding initiative "Strengthening solidarity in times of crises and change".

Beuth University's main task within the project is to translate the theoretically grounded category system into a self-learning algorithm, and to test and improve that algorithm.

Second Workshop in Cooperation with Practice Partners:

On Friday, 23 November 2018, the interdisciplinary project team of the BMBF-funded research project "NOHATE - Coping with Crises in Public Communication in the Field of Refugees, Migration, Foreigners" met for the second time with practice partners from politics, media, business and civil society. The meeting was hosted by the Weizenbaum Institute for Networked Society. The team presented first results from the subprojects and discussed current developments.

Further information on the workshop can be found here.

Publications

Betty van Aken, Julian Risch, Ralf Krestel, Alexander Löser, “Challenges for Toxic Comment Classification: An In-Depth Error Analysis”, 2nd Workshop on Abusive Language Online @ EMNLP 2018 [PDF].

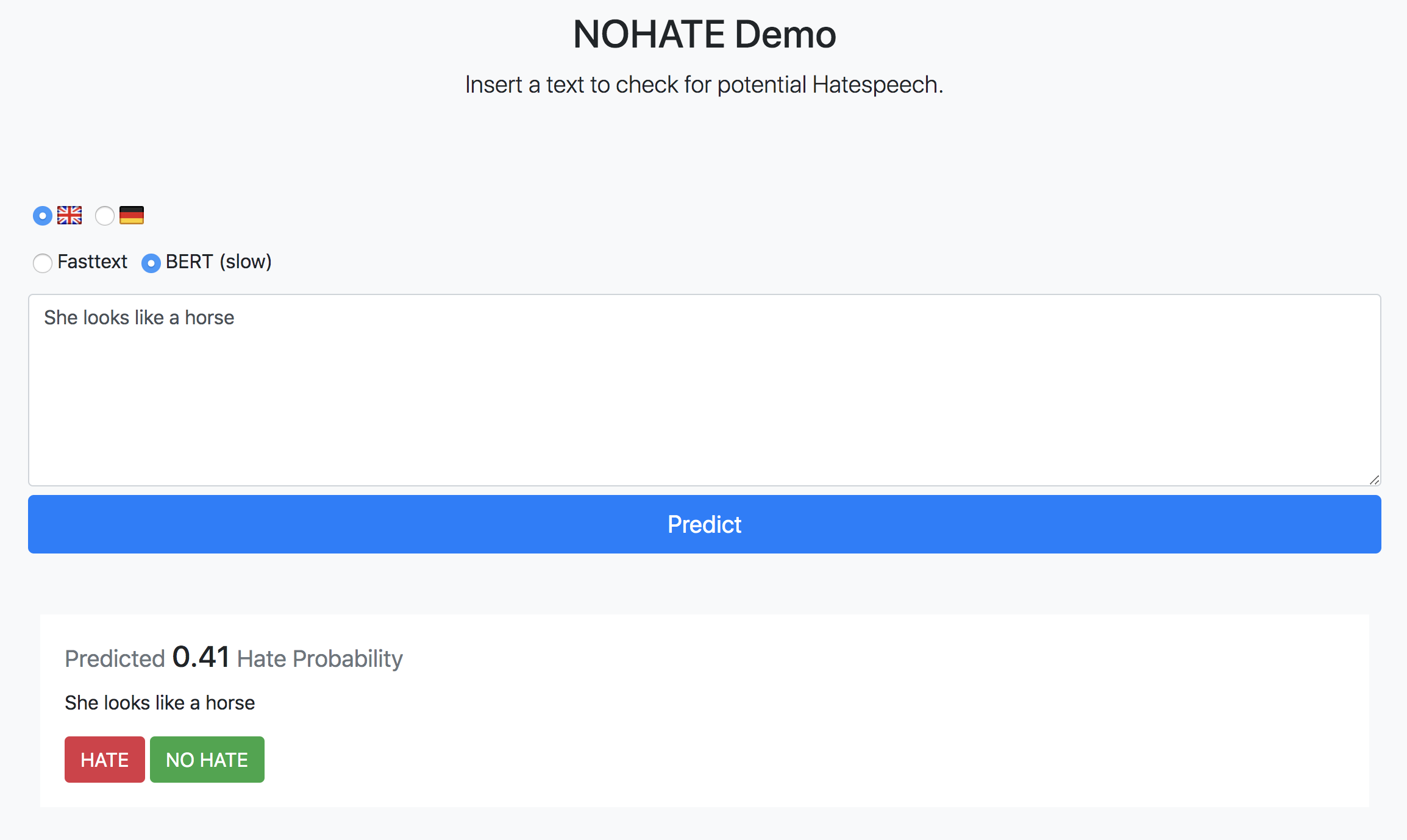

NOHATE Demo

The NOHATE Demo shows how our classifiers perform on different hate and non-hate examples. When users correct a classifier's prediction, the classifier can learn new patterns in the data and improve over time.
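The correction loop described above can be illustrated with a minimal sketch. It is not the NOHATE classifiers themselves, which are not specified here: it assumes a toy bag-of-words perceptron as a stand-in, and the class name `OnlineTextClassifier`, its methods and the example sentences are all hypothetical.

```python
class OnlineTextClassifier:
    """Toy bag-of-words perceptron (a stand-in, not the NOHATE model).

    Label 1 means "hate", label 0 means "non-hate".
    """

    def __init__(self):
        self.weights = {}  # token -> weight
        self.bias = 0.0

    @staticmethod
    def tokenize(text):
        # Simplifying assumption: lowercase and split on whitespace.
        return text.lower().split()

    def score(self, text):
        return self.bias + sum(self.weights.get(t, 0.0) for t in self.tokenize(text))

    def predict(self, text):
        return 1 if self.score(text) > 0 else 0

    def correct(self, text, true_label):
        """Apply a user correction; return True if the model was updated."""
        if self.predict(text) == true_label:
            return False  # prediction was already right, nothing to learn
        # Perceptron update: move weights towards the corrected label.
        step = 1.0 if true_label == 1 else -1.0
        for token in self.tokenize(text):
            self.weights[token] = self.weights.get(token, 0.0) + step
        self.bias += step
        return True


clf = OnlineTextClassifier()
first_guess = clf.predict("you are awful")      # untrained model
clf.correct("you are awful", 1)                 # user marks it as hate
clf.correct("have a nice day", 0)               # user marks it as non-hate
second_guess = clf.predict("you are awful")     # prediction after feedback
```

Each correction changes the model only when the prediction was wrong, so repeated feedback on examples it already handles correctly leaves the weights untouched.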

Read More

"Deep Learning zur Klassifizierung von Hate Speech: Zwischenergebnisse des NOHATE Forschungsprojekts", Hanna Gleiß, January 12, 2019

"Wie der Bund Hasskommentaren im Internet entgegenwirken will", Frederik Tebbe, February 2, 2019